The Servo Book

Servo is a web browser engine written in the Rust programming language, and currently developed on 64-bit Linux, 64-bit macOS, 64-bit Windows, and Android.

Work is still ongoing to make Servo consumable as a webview library. Currently, the recommended way to run Servo as a browser is via servoshell, our winit and egui-based demo browser. If you’d like to embed Servo in your own application, consider using tauri-runtime-verso, a custom Tauri runtime, or servo-gtk, a GTK4-based web browser widget.

This book will be your guide to building and running servoshell, hacking on and contributing to Servo, the architecture of Servo, and how to consume Servo and its libraries.

This book is a work in progress! In the table of contents, * denotes chapters that were recently added or imported from older docs, and still need to be copyedited or reworked.

Contributions are always welcome. Click the pencil button in the top right of each page to propose changes, or go to servo/book for more details.

Need help?

Join the Servo Zulip if you have any questions. Everyone is welcome!

Getting servoshell

The Servo project ships a small test browser called servoshell, which allows testing Servo. servoshell is not a fully fledged browser, but is meant as a way to see how Servo works on your system and to run tests. You can download the latest prebuilt version of servoshell from the Downloads section on our website. You can also download servoshell using the links below:

- Windows Installer (sha256)

- Windows ZIP (sha256)

- macOS (x86) (sha256)

- macOS (AArch64) (sha256)

- Linux (x86) (sha256)

- Android (AArch64) (sha256)

- OpenHarmony (AAarch64) (sha256)

Older nightly releases are available in the servo/servo-nightly-builds repo.

Running servoshell

Assuming you’re in the directory containing servo, you can run servoshell with:

$ ./servo [url] [options]

Use --help to list the available command line options:

$ ./servo --help

Enabling experimental web platform features

Servo has in-progress support for many web platform features.

Some are not complete enough to be enabled by default, but you can try them out using a preference setting.

For a list of these features and the preference used to enable them, see [Experimental features](../design-documentation/experimental-features.md].

In addition, you can enable a useful subset of these features with the --enable-experimental-web-platform-features command-line argument or via the servoshell user interface.

Keyboard shortcuts

- Ctrl+

Q(⌘Q on macOS) exits servoshell - Ctrl+

L(⌘L on macOS) focuses the location bar - Ctrl+

R(⌘R on macOS) reloads the page - Alt+

←(⌘← on macOS) goes back in history - Alt+

→(⌘→ on macOS) goes forward in history - Ctrl+

=(⌘= on macOS) increases the page zoom - Ctrl+

-(⌘- on macOS) decreases the page zoom - Ctrl+

0(⌘0 on macOS) resets the page zoom - Esc exits fullscreen mode

Troubleshooting

servoshell should run on most systems without any need to install dependencies. If you are on Linux and servoshell reports that a shared library is missing, ensure that you have the following packages installed:

GStreamer≥ 1.18gst-plugins-base≥ 1.18gst-plugins-good≥ 1.18gst-plugins-bad≥ 1.18gst-plugins-ugly≥ 1.18libXcursorlibXrandrlibXilibxkbcommonvulkan-loader

Getting the code

To make changes to Servo or build servoshell yourself, clone the main repo with Git:

$ git clone https://github.com/servo/servo.git

$ cd servo

Servo’s repository is pretty big! If you have an unreliable network connection or limited disk space, consider making a shallow clone.

Once you’ve cloned the Servo source code, the next step is to install dependencies and build Servo.

Building Servo

This page contains more detailed information about building Servo. You might want to skip straight to instructions for building Servo on your system:

mach

You need to use mach to build Servo.

mach is a Python program that does plenty of things to make working on Servo easier, like building and running Servo, running tests, and updating dependencies.

Windows users: you will need to replace ./mach with .\mach in the commands in this book if you are using cmd.

Use --help to list the subcommands, or get help with a specific subcommand:

$ ./mach --help

$ ./mach build --help

When you use mach to run another program, such as servoshell, that program may have its own options with the same names as mach options.

You can use --, surrounded by spaces, to tell mach not to touch any subsequent options and leave them for the other program.

$ ./mach run --help # Gets help for `mach run`.

$ ./mach run -d --help # Still gets help for `mach run`.

$ ./mach run -d -- --help # Gets help for the debug build of servoshell.

This also applies to the Servo unit tests, where there are three layers of options: mach options, cargo test options, and libtest options.

$ ./mach test-unit --help # Gets help for `mach test-unit`.

$ ./mach test-unit -- --help # Gets help for `cargo test`.

$ ./mach test-unit -- -- --help # Gets help for the test harness (libtest).

Work is ongoing to make it possible to build Servo without mach. Where possible, consider whether you can use native Cargo functionality before adding new functionality to mach.

Build profiles

There are three main build profiles, which you can build and use independently of one another:

- debug builds, which allow you to use a debugger (lldb) (selected by default if no profile is passed)

- release builds, which are slower to build but more performant

- production builds, which are used for official releases only

| debug | release | production | |

|---|---|---|---|

| mach option | -d |

-r |

--prod |

| optimised? | no | yes | yes, more than in release |

| maximum RUST_LOG level | trace |

info |

info |

| debug assertions? | yes | yes(!) | no |

| debug info? | yes | no | no |

| symbols? | yes | no | yes |

| finds resources in current working dir? |

yes | yes | no(!) |

There are also two special variants of production builds for performance-related use cases:

production-strippedbuilds are ideal for benchmarking Servo over time, with debug symbols stripped for faster initial startupprofilingbuilds are ideal for profiling and troubleshooting performance issues; they behave like a debug or release build, but have the same performance as a production build

| production | production-stripped | profiling | |

|---|---|---|---|

mach --profile |

production |

production-stripped |

profiling |

| debug info? | no | no | yes |

| symbols? | yes | no | yes |

| finds resources in current working dir? |

no | no | yes(!) |

You can change these settings in a servobuild file (see servobuild.example) or in the root Cargo.toml.

Optional build settings

Some build settings can only be enabled manually:

- AddressSanitizer builds are enabled with

./mach build --with-asan - ThreadSanitizer builds are enabled with

./mach build --with-tsan - crown linting is recommended when hacking on DOM code, and is enabled with

./mach build --use-crown - SpiderMonkey debug builds are enabled with

./mach build --debug-mozjs, or[build] debug-mozjs = truein your servobuild file

A full list of arguments can be seen by running ./mach build --help.

Running servoshell

When you build it yourself, servoshell will be in target/debug/servo or target/release/servo.

You can run it directly as shown above, but we recommend using mach instead.

To run servoshell with mach, replace ./servo with ./mach run -d -- or ./mach run -r --, depending on the build profile you want to run.

For example, both of the commands below run the debug build of servoshell with the same options:

$ target/debug/servo https://demo.servo.org

$ ./mach run -d -- https://demo.servo.org

Building for Linux

- Install

curl:- Arch:

sudo pacman -S --needed curl - Debian, Ubuntu:

sudo apt install curl - Fedora:

sudo dnf install curl - Gentoo:

sudo emerge net-misc/curl

- Arch:

- Install

uv:curl -LsSf https://astral.sh/uv/install.sh | sh - Install

rustup:curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh - Restart your shell to make sure

cargois available - Install the other dependencies:

./mach bootstrap - Build servoshell:

./mach build

Unsupported Distributions

If ./mach boostrap reports that your distribution is unsupported, then you will need to install dependencies manually.

Below you will find instructions for installing build dependencies on a variety of types of distributions.

If your distribution is not listed, it’s recommended that you try to adapt the list for the package names on your system.

Updates to this list are very welcome!

Arch and Manjaro

-

sudo pacman -S --needed curl -

sudo pacman -S --needed base-devel git mesa cmake libxmu pkg-config ttf-fira-sans harfbuzz ccache llvm clang autoconf2.13 gstreamer gstreamer-vaapi gst-plugins-base gst-plugins-good gst-plugins-bad gst-plugins-ugly vulkan-icd-loader wireshark-cli

Debian-like

(including elementary OS, KDE neon, Linux Mint, Pop!_OS, Raspbian, TUXEDO OS, Ubuntu)

sudo apt install curl

sudo apt install build-essential ccache clang cmake curl g++ git gperf libdbus-1-dev libfreetype6-dev libgl1-mesa-dri libgles2-mesa-dev libglib2.0-dev gstreamer1.0-plugins-good gstreamer1.0-plugins-bad libgstreamer-plugins-bad1.0-dev gstreamer1.0-plugins-ugly gstreamer1.0-plugins-base libgstreamer-plugins-base1.0-dev gstreamer1.0-libav libgstrtspserver-1.0-dev gstreamer1.0-tools libges-1.0-dev libharfbuzz-dev liblzma-dev libudev-dev libunwind-dev libvulkan1 libx11-dev libxcb-render0-dev libxcb-shape0-dev libxcb-xfixes0-dev libxmu-dev libxmu6 libegl1-mesa-dev llvm-dev m4 xorg-dev libxkbcommon0 libxkbcommon-x11-0

Note: For Ubuntu-based distributions, ensure that you also include the libgstreamer-plugins-good1.0-dev package alongside the packages listed above.

Fedora-like

sudo dnf install cabextract ccache clang clang-libs cmake dbus-devel expat-devel fontconfig-devel freetype-devel gcc-c++ glib2-devel gperf gstreamer1-devel gstreamer1-plugins-bad-free-devel gstreamer1-plugins-base-devel gstreamer1-plugins-good gstreamer1-plugins-ugly-free harfbuzz-devel libX11-devel libXcursor-devel libXi-devel libXmu-devel libXrandr-devel libjpeg-turbo libjpeg-turbo-devel libtool libunwind-devel libxkbcommon libxkbcommon-x11 llvm mesa-libEGL-devel mesa-libGL-devel ncurses-devel python3-devel rpm-build ttmkfdir vulkan-loader zlib-ng

Gentoo-like

sudo emerge net-misc/curl media-libs/freetype media-libs/mesa dev-util/gperf dev-libs/openssl media-libs/harfbuzz dev-util/ccache sys-libs/libunwind x11-libs/libXmu x11-base/xorg-server sys-devel/clang media-libs/gstreamer media-libs/gst-plugins-base media-libs/gst-plugins-good media-libs/gst-plugins-bad media-libs/gst-plugins-ugly media-libs/vulkan-loader

openSUSE

sudo zypper install libX11-devel libexpat-devel Mesa-libEGL-devel Mesa-libGL-devel cabextract cmake dbus-1-devel fontconfig-devel freetype-devel gcc-c++ git glib2-devel gperf harfbuzz-devel libXcursor-devel libXi-devel libXmu-devel libXrandr-devel libopenssl-devel rpm-build ccache llvm-clang libclang autoconf213 gstreamer-devel gstreamer-plugins-base-devel gstreamer-plugins-good gstreamer-plugins-bad-devel gstreamer-plugins-ugly vulkan-loader libvulkan1

Void Linux

sudo xbps-install bzip2-devel cabextract ccache clang cmake cmake dbus-devel expat-devel fontconfig-devel freetype-devel gcc glib-devel glu-devel gperf gst-plugins-bad1-devel gst-plugins-base1-devel gst-plugins-good1 gst-plugins-ugly1 gstreamer1-devel harfbuzz-devel libtool libunwind-devel libX11-devel libXcursor-devel libXi-devel libxkbcommon libxkbcommon-x11 libXmu-devel libXrandr-devel MesaLib-devel ncurses-devel pkg-config vulkan-loader

Troubleshooting

Be sure to look at the General Troubleshooting section if you have trouble with your build and your problem is not listed below.

build: /lib/x86_64-linux-gnu/libc.so.6: version `GLIBC_2.39' not found

This workaround is applicable when building Servo using nix in Linux distributions other than NixOS.

The error indicates that the version of glibc included in the distribution is older than the one in nixpkgs.

At the end of the shell.nix, change the line if ! [ -e /etc/NIXOS ]; then to if false; then to disable the support in shell.nix for producing binary artifacts that don’t depend on the nix store.

Building for macOS

- Download and install Xcode and

brew. - Install

uv:curl -LsSf https://astral.sh/uv/install.sh | sh - Install

rustup:curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh - Restart your shell to make sure

cargois available - Install the other dependencies:

./mach bootstrap - Build servoshell:

./mach build

Troubleshooting

Be sure to look at the General Troubleshooting section if you have trouble with your build.

Building for Windows

- Download

uv,choco, andrustup- Be sure to select Quick install via the Visual Studio Community installer

- In the Visual Studio Installer, ensure the following components are installed:

- Windows 10/11 SDK (anything >= 10.0.19041.0) (

Microsoft.VisualStudio.Component.Windows{10, 11}SDK.{>=19041}) - MSVC v143 - VS 2022 C++ x64/x86 build tools (Latest) (

Microsoft.VisualStudio.Component.VC.Tools.x86.x64) - C++ ATL for latest v143 build tools (x86 & x64) (

Microsoft.VisualStudio.Component.VC.ATL)

- Windows 10/11 SDK (anything >= 10.0.19041.0) (

- Restart your shell to make sure

cargois available - Install the other dependencies:

.\mach bootstrap - Build servoshell:

.\mach build

We don’t recommend having more than one version of Visual Studio installed. Servo will try to search for the appropriate version of Visual Studio, but having only a single version installed means fewer things can go wrong.

Troubleshooting

Be sure to look at the General Troubleshooting section if you have trouble with your build and your problem is not listed below.

Cannot run mach in a path on a case-sensitive file system on Windows.

- Open a command prompt or PowerShell as administrator (Win+X, A)

- Disable case sensitivity for your Servo repo:

fsutil file SetCaseSensitiveInfo X:\path\to\servo disable

Could not find DLL dependency: api-ms-win-crt-runtime-l1-1-0.dllDLL file `api-ms-win-crt-runtime-l1-1-0.dll` not found!

Find the path to Redist\ucrt\DLLs\x64\api-ms-win-crt-runtime-l1-1-0.dll, e.g. C:\Program Files (x86)\Windows Kits\10\Redist\ucrt\DLLs\x64\api-ms-win-crt-runtime-l1-1-0.dll.

Then set the WindowsSdkDir environment variable to the path that contains Redist, e.g. C:\Program Files (x86)\Windows Kits\10.

thread 'main' panicked at 'Unable to find libclang: "couldn\'t find any valid shared libraries matching: [\'clang.dll\', \'libclang.dll\'], set the `LIBCLANG_PATH` environment variable to a path where one of these files can be found (invalid: [(C:\\Program Files\\LLVM\\bin\\libclang.dll: invalid DLL (64-bit))])"', C:\Users\me\.cargo\registry\src\...

rustup may have been installed with the 32-bit default host, rather than the 64-bit default host needed by Servo.

Check your default host with rustup show, then set the default host:

> rustup set default-host x86_64-pc-windows-msvc

ERROR: GetShortPathName returned a long path name: `C:/PROGRA~2/Windows Kits/10/`. Use `fsutil file setshortname' to create a short name for any components of this path that have spaces.

SpiderMonkey (mozjs) requires 8.3 filenames to be enabled on Windows (#26010).

- Open a command prompt or PowerShell as administrator (Win+X, A)

- Enable 8.3 filename generation:

fsutil behavior set disable8dot3 0 - Uninstall and reinstall whatever contains the failing paths, such as Visual Studio or the Windows SDK — this is easier than adding 8.3 filenames by hand

= note: lld-link: error: undefined symbol: __std_search_1 >>> referenced by D:\a\mozjs\mozjs\mozjs-sys\mozjs\intl\components\src\NumberFormatterSkeleton.cpp:157

Issues like this can occur when mozjs is upgraded, as the update may depend on newer MSVC (remember we require “Latest” in set up your environment!). To resolve it, launch the Visual Studio Installer and apply all available updates.

Building for NixOS

- Install Nix, the package manager. The easiest way is to use the installer, with either the multi-user or single-user installation (your choice).

- Tell

machto use Nix:export MACH_USE_NIX= - Type

nix-shellto enter a shell with all of the necessary tools and dependencies. - Install the other dependencies:

./mach bootstrap - Build servoshell:

./mach build

Troubleshooting

Be sure to look at the General Troubleshooting section if you have trouble with your build and your problem is not listed below.

error: getting status of /nix/var/nix/daemon-socket/socket: Permission denied

If you get this error and you’ve installed Nix with your system package manager:

- Add yourself to the

nix-usersgroup - Log out and log back in

error: file 'nixpkgs' was not found in the Nix search path (add it using $NIX_PATH or -I)

This error is harmless, but you can fix it as follows:

- Run

sudo nix-channel --add https://nixos.org/channels/nixpkgs-unstable nixpkgs - Run

sudo nix-channel --update

Building on WSL

Servo can be built on WSL as if you are building on any other Linux distribution. To run non-headless servo shell on WSL, you will most likely need to be on Windows 10 Build 19044+ or Windows 11 as access to WSL v2 is needed for GUI.

- Setup WSL v2. See Microsoft’s guidelines for setting up GUI apps in WSL.

- Follow the instructions for building and running Servo on the WSL distribution that you are using (e.g. Ubuntu, OpenSuse, etc.)

WSL v2 has the corresponding adaptors to display Wayland and X11 applications, though it may not always work out of the box with Servo.

Troubleshooting

Be sure to look at the General Troubleshooting section if you have trouble with your build and your problem is not listed below.

Failed to create event loop

If you encounter an immediate crash after running that points to winit and its platform implementation, setting WAYLAND_DISPLAY='' stops the crash.

Failed to create events loop: Os(OsError { line: 81, file: "/home/astra/.cargo/registry/src/index.crates.io-1949cf8c6b5b557f/winit-0.30.9/src/platform_impl/linux/wayland/event_loop/mod.rs", error: WaylandError(Connection(NoCompositor)) }) (thread main, at ports/servoshell/desktop/cli.rs:34)

0: servoshell::backtrace::print

at /home/astra/workspace/servo/ports/servoshell/backtrace.rs:18:5

...

18: main

19: <unknown>

20: __libc_start_main

21: _start

Servo was terminated by signal 11

Either export the variable, or set it before running:

export WAYLAND_DISPLAY=''

./mach run

# or

WAYLAND_DISPLAY='' ./mach run

# optionally save the variable long term to your .bashrc profile

echo 'export WAYLAND_DISPLAY=""' >> ~/.bashrc

Library libxkbcommon-x11.so could not be loaded

This may happen because your distro have not installed the required library. Run the following command (assuming you are using WSL Debian/Ubuntu, adjust accordingly if you use other distro):

sudo apt install libxkbcommon-x11-0

error: failed to run build command...

if you encounter an error like below while running ./mach build on WSL, it is possibly caused by out of memory (OOM) error because your WSL does not have enough RAM to build servo.

You will need to increase memory usage limit and swapfile on WSL, or upgrade your RAM to fix it.

yourusername@PC:~/servo$ ./mach build

No build type specified so assuming `--dev`.

Building `debug` build with crown disabled (no JS garbage collection linting).

...

Compiling script v0.0.1 (/home/yourusername/servo/components/script)

error: failed to run build command...

Caused by:

process didn't exit successfully: `/home/yourusername/.rustup/toolchains/1.91.0-x86_64-unknown-linux-gnu/bin/rustc --crate-name script --edition=2024 components/script/lib.rs...

...

warning: build failed, waiting for other jobs to finish...

Create C:/user/yourusername/.wslconfig then insert the following:

[wsl2]

memory=6GB

swap=16GB

swapfile=C:\\Users\\yourusername\\swapfile.vhdx

Save the file and restart WSL on powershell:

wsl --shutdown

Building for Android

- Ensure that the following environment variables are set:

ANDROID_SDK_ROOTANDROID_NDK_ROOT:$ANDROID_SDK_ROOT/ndk/28.2.13676358/ANDROID_SDK_ROOTcan be any directory (such as~/android-sdk). All of the Android build dependencies will be installed there.

- Install the latest version of the Android command-line tools to

$ANDROID_SDK_ROOT/cmdline-tools/latest. - Run the following command to install the necessary components:

sudo $ANDROID_SDK_ROOT/cmdline-tools/latest/bin/sdkmanager --install \ "build-tools;34.0.0" \ "emulator" \ "ndk;28.2.13676358" \ "platform-tools" \ "platforms;android-33" \ "system-images;android-33;google_apis;arm64-v8a" - Run

./mach build --android -r

Note: This will install dependencies and build Servo for the aarch64-linux-android platform.

In order to build Servo for other Android targets, ensure that you install the appropriate system images via sdkmanager and pass --target with a Rust compatible target to mach when building instead of --android.

Note: If you are not using Android Studio on macOS, you will need to install a JDK.

Use brew install opendjdk@21 to install a usable version; newer versions cause java.lang.IllegalArgumentException: 25 when running the gradle build step during the Servo build.

Note: If you are using Nix, you don’t need to install the tools or set up the ANDROID_SDK_ROOT and ANDROID_NDK_ROOT environment variables manually.

Simply enable the Android build support running:

export SERVO_ANDROID_BUILD=1

in the shell session before invoking ./mach commands

Building with Android Studio

It’s recommended to build Servo via the command-line for Android, but you can also build it using Android Studio, if you prefer to use the Android IDE.

- Install Android Studio by downloading the appropriate version from the official website and following the installation instructions.

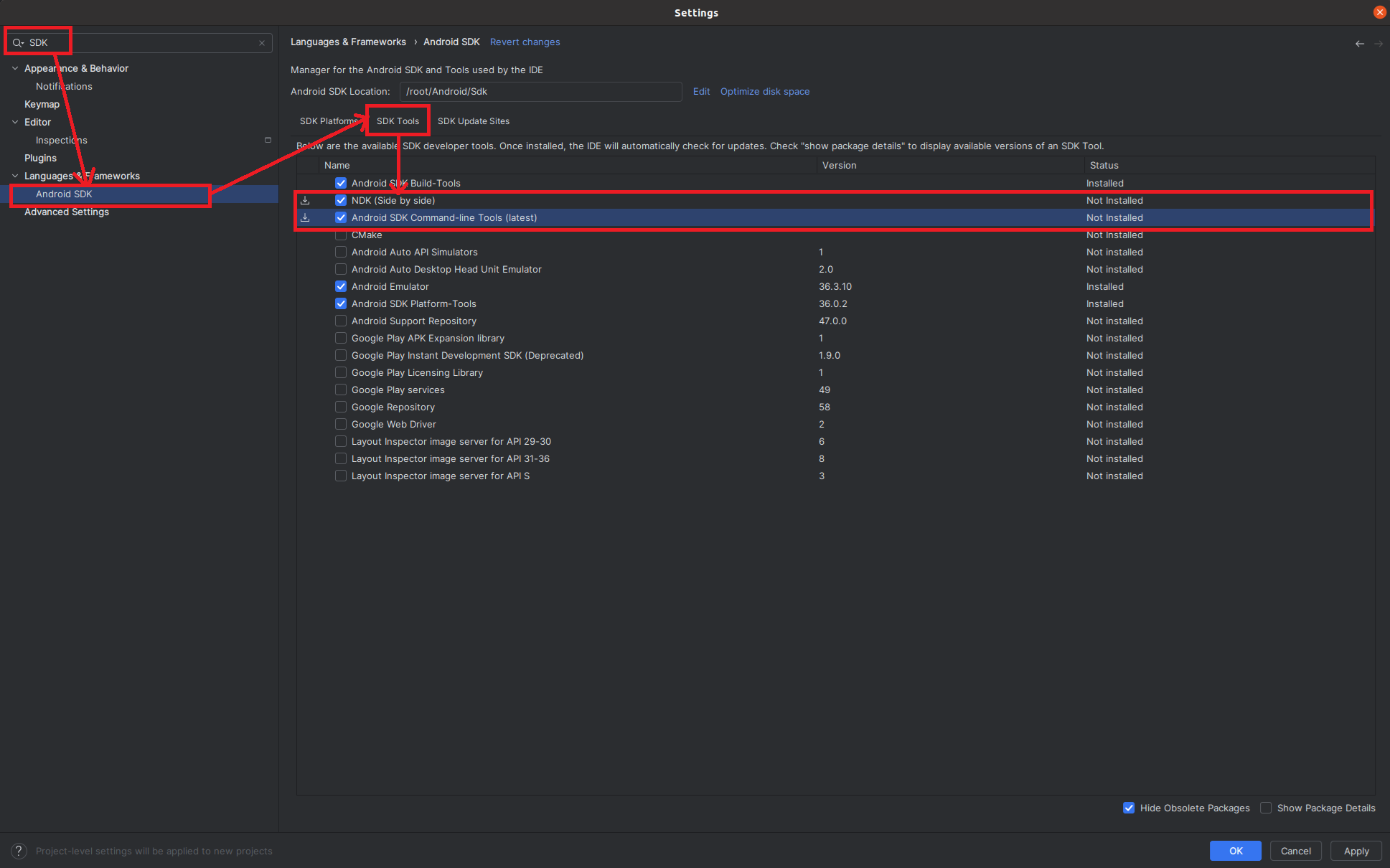

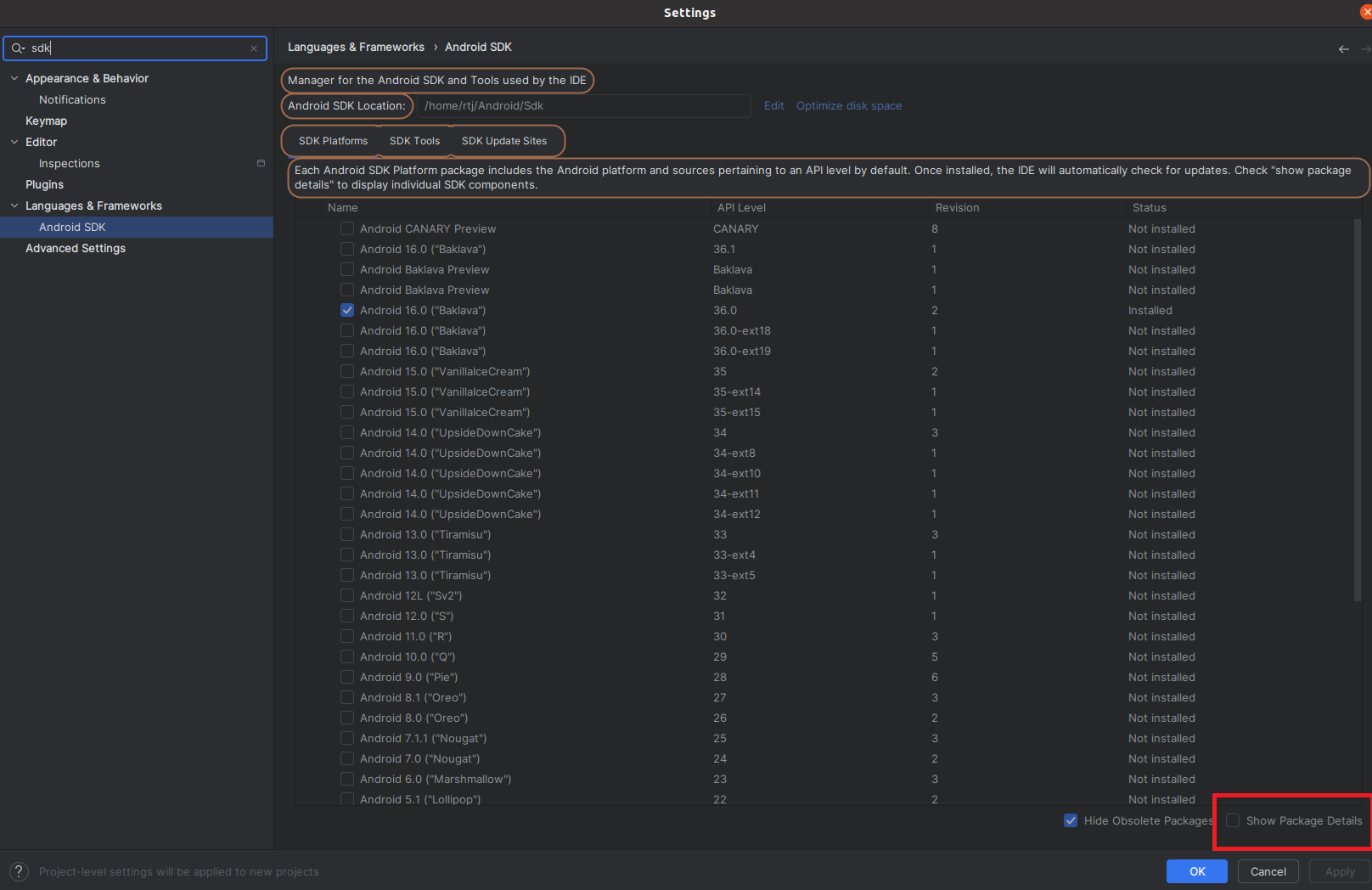

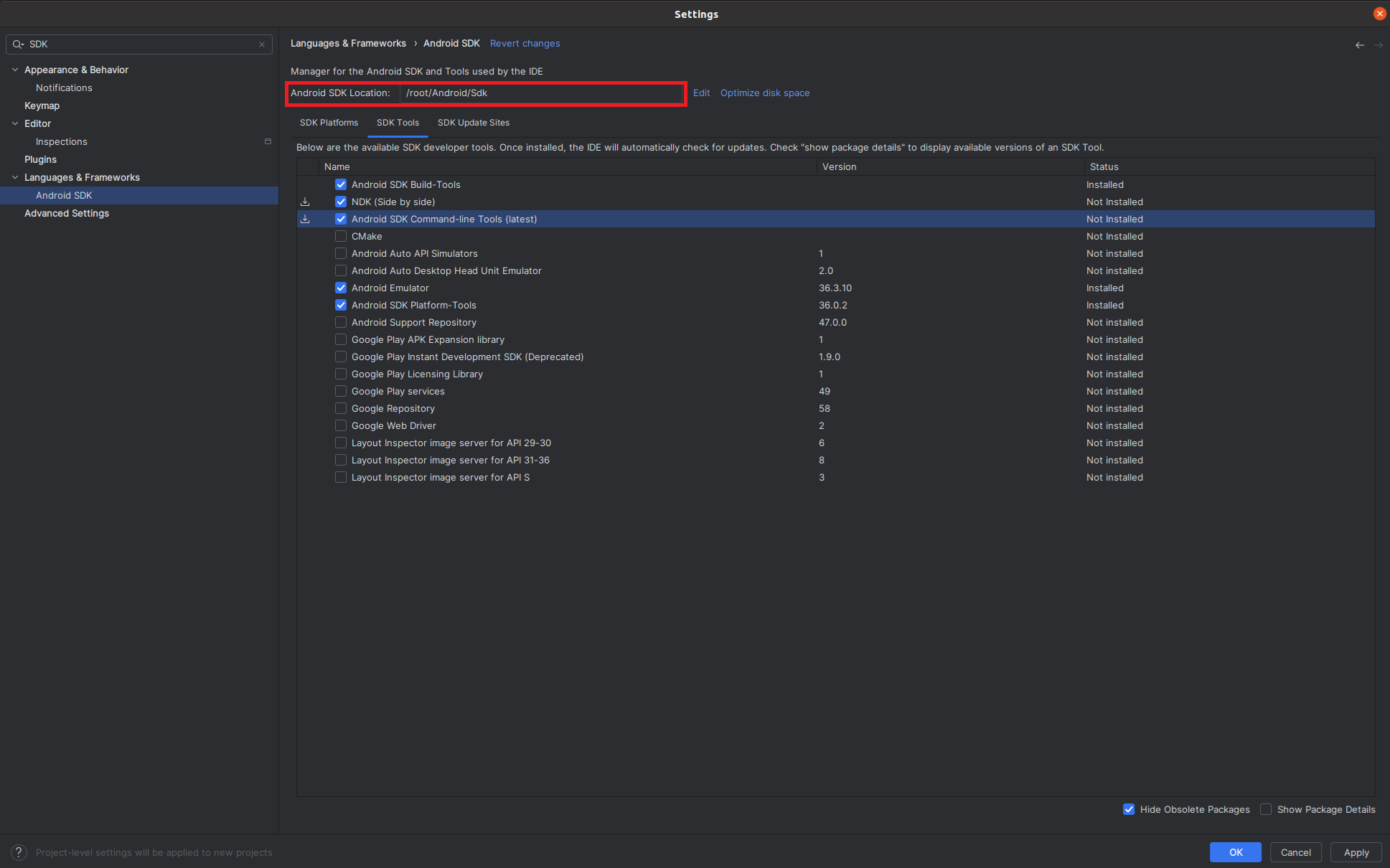

- In order to install additional tools run Android Studio, go to Settings and type

sdkon the search bar. - Select the SDK:

- Click Android SDK`

- Navigate to SDK Tools

- Check Android SDK Command-line tools (latest):

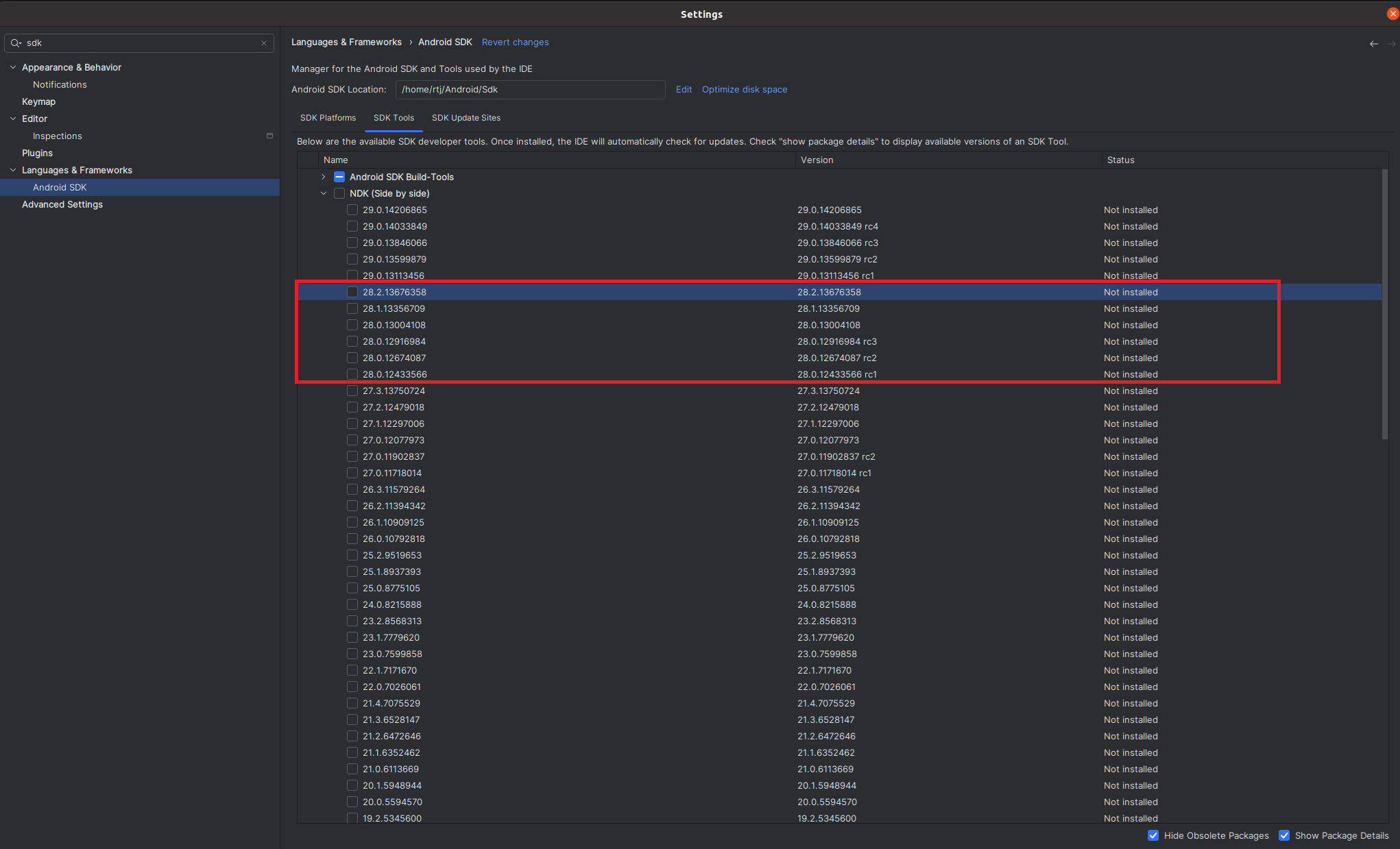

- Select the NDK. Note that Servo requires version 28 of the NDK.

- In the SDK Tools section, select NDK (side by side)

- Click show package details:

- Select the latest version of NDK 28:

- Click Ok to install both the NDK and SDK.

- Find the path to the SDK under Languages & Frameworks then Android SDK Location:

.

Then, ensure that the following environment variables are set:

.

Then, ensure that the following environment variables are set:

ANDROID_SDK_ROOT: The path found above.ANDROID_NDK_ROOT:$ANDROID_SDK_ROOT/ndk/28.2.13676358/

- Run

./mach build --android -r

Running in the emulator

- Create a new AVD image to run Servo:

$ANDROID_SDK_ROOT/cmdline-tools/latest/bin/avdmanager create avd \ --name "Servo" \ --device "pixel" \ --package "system-images;android-33;google_apis;arm64-v8a" \ --tag "google_apis" \ --abi "arm64-v8a" - Enable the hardware keyboard.

Open

~/.android/avd/Servo.avd/config.iniand changehw.keyboard = notohw.keyboard = yes. - Launch the emulator

$ANDROID_SDK_ROOT/emulator/emulator -avd servo -netdelay none -no-snapshot - Install Servo on the emulator:

./mach install -r --android - Start Servo by tapping the Servo icon on your launcher screen.

Installing on a physical device

- Set up your device for development.

- Build Servo as described above, ensuring that you are building for the appropriate target for your device.

- Install Servo to your device by running:

./mach install -r --android - Start Servo by tapping the Servo icon on your launcher screen or run:

./mach run --android https://www.servo.org/

You can request a force-stop of Servo by running:

adb shell am force-stop org.servo.servoshell/org.servo.servoshell.MainActivity

If the above doesn’t work, try this:

adb shell am force-stop org.servo.servoshell

You can uninstall Servo by running:

adb uninstall org.servo.servoshell

Troubleshooting

Be sure to look at the General Troubleshooting section if you have trouble with your build.

Building for OpenHarmony

Get the OpenHarmony tools

Building for OpenHarmony requires the following:

- The OpenHarmony SDK. This is sufficient to compile servo as a shared library for OpenHarmony.

- The

hvigorbuild tool to compile an application into an app bundle and sign it.

Setting up the OpenHarmony SDK

The OpenHarmony SDK is required to compile applications for OpenHarmony. The minimum version of SDK that Servo currently supports is v5.0.2 (API-14).

Downloading via DevEco Studio

DevEco Studio is an IDE for developing applications for HarmonyOS NEXT and OpenHarmony. It supports Windows and macOS. You can manage installed OpenHarmony SDKs by clicking File->Settings and selecting “OpenHarmony SDK”. After setting a suitable installation path, you can select the components you want to install for each available API version. DevEco Studio will automatically download and install the components for you.

Manual installation of the OpenHarmony SDK (e.g. on Linux)

- Go to the OpenHarmony release notes and select the version you want to compile for.

- Scroll down to the section “Acquiring Source Code from Mirrors” and click the download link for the version of “Public SDK package for the standard system” matching your host system.

- Extract the archive to a suitable location.

- Switch into the SDK folder with

cd <sdk_folder>/<your_operating_system>. - Create a sub-folder with the same name as the API version (e.g 14 for SDK v5.0.2) and switch into it.

- Unzip the zip files of the individual components into the folder created in the previous step. Preferably use the

unzipcommand on the command-line, or manually ensure that the unzipped bundles are called e.g.nativeand notnative-linux-x64-5.x.y.z.

The following snippet can be used as a reference for steps 4-6:

cd ~/ohos-sdk/linux

for COMPONENT in "native toolchains ets js previewer" do

echo "Extracting component ${COMPONENT}"

unzip ${COMPONENT}-*.zip

API_VERSION=$(cat ${COMPONENT}/oh-uni-package.json | jq -r '.apiVersion')

mkdir -p ${API_VERSION}

mv ${COMPONENT} "${API_VERSION}/"

done

On windows, it is recommended to use 7zip to unzip the archives, since the windows explorer unzip tool is extremely slow.

Manual installation of the HarmonyOS NEXT commandline tools

The HarmonyOS NEXT commandline tools contain the OpenHarmony SDK and the following additional tools:

codelinter(linter)hstack(crash dump stack analysis tool)hvigor/hvigorw(build tool)ohpm(package manager)

Currently, the commandline tools package is not publicly available and requires a chinese Huawei account to download.

Manual installation of hvigor without the commandline tools

hvigor (not the wrapper hvigorw) is also available via npm.

-

Install the same nodejs version as the commandline-tools ship. For HarmonyOS NEXT Node 18 is shipped. Ensure that the

nodebinary is in PATH. -

Install Java using the recommended installation method for your OS. The build steps are known to work with OpenJDK v17, v21 and v23. On macOS, if you install Homebrew’s OpenJDK formula, the following additional command may need to be run after the installation:

# For the system Java wrappers to find this JDK, symlink it with sudo ln -sfn $HOMEBREW_PREFIX/opt/openjdk/libexec/openjdk.jdk /Library/Java/JavaVirtualMachines/openjdk.jdk -

Edit your

.npmrcto contain the following line:@ohos:registry=https://repo.harmonyos.com/npm/ -

Install hvigor and the hvigor-ohos-plugin. This will create a

node_modulesdirectory in the current directory.npm install @ohos/hvigor npm install @ohos/hvigor-ohos-plugin -

Now you should be able to run

hvigor.jsin your OpenHarmony project to build a hap bundle:/path/to/node_modules/@ohos/hvigor/bin/hvigor.js assembleHap

Configuring hdc on Linux

hdc is the equivalent to adb for OpenHarmony devices.

You can find it in the toolchains directory of your SDK.

For convenience purposes, you might want to add toolchains to your PATH.

Among others, hdc can be used to open a shell or transfer files between a device and the host system.

hdc needs to connect to a physical device via usb, which requires the user has permissions to access the device.

It’s recommended to add a udev rule to allow hdc to access the corresponding device without needing to run hdc as root.

This stackoverflow answer also applies to hdc.

Run lsusb and check the vendor id of your device, and then create the corresponding udev rule.

Please note that your user should be a member of the group you specify with GROUP="xxx".

Depending on your Linux distributions you may want to use a different group.

To check if hdc is now working, you can run hdc list targets and it should show your device serial number.

If it doesn’t work, try rebooting.

Please note that your device needs to be in “Developer mode” with USB debugging enabled. The process here is exactly the same as one android:

- Tap the build number multiple times to enable developer mode.

- Then navigate to the developer options and enable USB debugging.

- When you connect your device for the first time, confirm the pop-up asking you if you want to trust the computer you are connecting to.

Signing configuration

Most devices require that the HAP is digitally signed by the developer to be able to install it.

When using the hvigor tool, this can be accomplished by setting a static signingConfigs object in the build-profile.json5 file or by dynamically creating the signingConfigs array on the application context object in the hvigorfile.ts script.

The signingConfigs property is an array of objects with the following structure:

{

"name": "default",

"type": "<OpenHarmony or HarmonyOS>",

"material": {

"certpath": "/path/to/app-signing-certificate.cer",

"storePassword": "<encrypted password>",

"keyAlias": "debugKey",

"keyPassword": "<encrypted password>",

"profile": "/path/to/signed-profile-certificate.p7b",

"signAlg": "SHA256withECDSA",

"storeFile": "/path/to/java-keystore-file.p12"

}

}

Here <encrypted password> is a hexadecimal string representation of the plaintext password after being encrypted.

The key and salt used to encrypt the passwords are generated by DevEco Studio IDE and are stored on-disk alongside the certificate files and keystore, usually under <USER HOME>/.ohos/config/openharmony.

You can use the IDE to generate the information needed for password encryption, the required application and profile certificate files, and the keystore itself.

- Open Project Structure dialog from

File > Project Structuremenu. - Under the ‘Signing Config’ tab, enable the ‘Automatically generate signature’ checkbox.

NOTE: The signature autogenerated above is intended only for development and testing. For production builds and distribution via an App Store, the relevant configuration needs to be obtained from the App Store provider.

Once generated, it is necessary to point mach to the above “signing material” configuration using the SERVO_OHOS_SIGNING_CONFIG environment variable.

The value of the variable must be a file path to a valid .json file with the same structure as the signingConfigs property given above, but with certPath, storeFile and profile given as paths relative to the json file, instead of absolute paths.

Building servoshell

Before building servo you will need to set some environment variables.

direnv is a convenient tool that can automatically set these variables based on an .envrc file, but you can also use any other method to set the required environment variables.

.envrc:

export OHOS_SDK_NATIVE=/path/to/openharmony-sdk/platform/api-version/native

# Required if the HAP must be signed. See the signing configuration section above.

export SERVO_OHOS_SIGNING_CONFIG=/path/to/signing-configs.json

# Required only when building for HarmonyOS:

export DEVECO_SDK_HOME=/path/to/command-line-tools/sdk # OR /path/to/DevEcoStudio/sdk OR on MacOS /Applications/DevEco-Studio.app/Contents/sdk

# Required only when building for OpenHarmony:

# Note: The openharmony sdk is under ${DEVECO_SDK_HOME}/default/openharmony

# Presumably you would need to replicate this directory structure

export OHOS_BASE_SDK_HOME=/path/to/openharmony-sdk/platform

# If you have the command-line tools installed:

export PATH="${PATH}:/path/to/command-line-tools/bin/"

export NODE_HOME=/path/to/command-line-tools/tool/node

# Alternatively, if you do NOT have the command-line tools installed:

export HVIGOR_PATH=/path/to/parent/directory/containing/node_modules # Not required if `hvigorw` is in $PATH

If you use direnv and an .envrc file, please remember to run direnv allow . after modifying the .envrc file.

Otherwise, the environment variables will not be loaded.

The following command can then be used to compile the servoshell application for a 64-bit ARM device or emulator:

./mach build --ohos --release [--flavor=harmonyos]

In mach build, mach install and mach package commands, --ohos is an alias for --target aarch64-unknown-linux-ohos.

To build for an emulator running on an x86-64 host, use --target x86_64-unknown-linux-ohos.

The default ohos build / package / install targets OpenHarmony.

If you want to build for HarmonyOS you can add --flavor=harmonyos.

Please check the Signing configuration and add a configuration with "name": "hos" and "type": "HarmonyOS"" and the respective signing certificates.

Installing and running on-device

The following command can be used to install previously built servoshell application on a 64-bit ARM device or emulator:

./mach install --ohos --release [--flavor=harmonyos]

Further reading

Troubleshooting

Be sure to look at the General Troubleshooting section if you have trouble with your build.

General Troubleshooting

See the style guide for how to format error messages.

If you run into trouble building Servo and you do not see your error listed on any other page, first try following the steps below:

- Ensure that you have all of the listed build requirements. In particular, if you are using an uncommon Linux distribution or some other kind of Unix, you may need to determine what the correct name of a particular dependency is on your system.

- Double-check that build requirements are installed and check dependency versions.

- Run

./mach boostrapor.\mach boostrapon Windows. Sometimes the tools or dependencies needed to build Servo will change. It is safe to run this command more than once. Ensure that no errors were reported during execution. If you have installed other dependencies manually you may need to run./mach bootstrap --skip-platform. - Refresh your environment. This may involve:

- Restarting your shell

- Logging out and logging back in

- Restarting your computer

Dependency versions

curl --versionshould print a version like 7.83.1 or 8.4.0- On Windows, type

curl.exe --versioninstead, to avoid getting the PowerShell alias forInvoke-WebRequest

- On Windows, type

uv --versionshould print 0.4.30 or newer- Servo’s

machbuild tool depends onuvto provision a pinned version of Python (set by the.python-versionfile in the repo) and create a local virtual environment (.venvdirectory) into which the python dependency modules are installed. - If the system already has an installation of the required Python version, then

uvwill just symlink to that installation to save disk space. - If the versions do not match or no Python installation is present on the host, then

uvwill download the required binaries. - Using an externally managed Python installation for executing

machas a Python script is currently not supported.

- Servo’s

rustup --versionshould print a version like 1.26.0- Windows:

choco --versionshould print a version like 2.2.2 - macOS:

brew --versionshould print a version like 4.2.17

You are not alone!

If you have problems building Servo that you can’t solve, you can always ask for help in the build issues chat on Zulip.

Getting Started

Servo welcomes contributions from everyone. Working on a web engine can be challenging and sometimes frustrating, but it can also be very rewarding and fun. If you spend the time and effort to get involved, you will constantly be learning and growing as a developer and open source contributor. To get started, please do the following:

- Read the rest of this page for basic information about contributing to Servo.

- Successfully fetch the Servo repository and build Servo.

- Set up your fork of Servo and learn the basics of using Git, following the contents of our Git Setup chapter.

- Learn a bit of Rust. There are many resources online for how to do this, but one of the best is the official Learning Rust documentation. If you are familiar with other imperative programming languages, you can learn Rust as you work more on Servo, but having a basic familiarity with the language is very useful to get started.

- Set up your editor so that it integrates with

rust-analyzer. - Read our Style Guide for expectations for the code your contribute.

- Read and following the steps for making a pull request.

Working on an issue

Should you wish to work on an issue, please leave a comment on it in order to claim the issue. This is to prevent duplicated efforts from contributors on the same issue.

Sending the message “@servo-highfive assign me” will assign the issue to you.

Head over to Servo Starters to find good tasks to start with.

If you find any unfamiliar words or jargon, please check the glossary first.

If there’s no matching entry, please make a pull request to add one with the content TODO so we can correct that!

AI contributions

Contributions must not include content generated by large language models or other probabilistic tools, including but not limited to Copilot or ChatGPT. This policy covers code, documentation, pull requests, issues, comments, and any other contributions to the Servo project.

For now, we’re taking a cautious approach to these tools due to their effects — both unknown and observed — on project health and maintenance burden. This field is evolving quickly, so we are open to revising this policy at a later date, given proposals for particular tools that mitigate these effects. Our rationale is as follows:

Maintainer burden: Reviewers depend on contributors to write and test their code before submitting it. We have found that these tools make it easy to generate large amounts of plausible-looking code that the contributor does not understand, is often untested, and does not function properly. This is a drain on the (already limited) time and energy of our reviewers.

Correctness and security: Even when code generated by AI tools does seem to function, there is no guarantee that it is correct, and no indication of what security implications it may have. A web browser engine is built to run in hostile execution environments, so all code must take into account potential security issues. Contributors play a large role in considering these issues when creating contributions, something that we cannot trust an AI tool to do.

Copyright issues: Publicly available models are trained on copyrighted content, both accidentally and intentionally, and their output often includes that content verbatim. Since the legality of this is uncertain, these contributions may violate the licenses of copyrighted works.

Ethical issues: AI tools require an unreasonable amount of energy and water to build and operate, their models are built with heavily exploited workers in unacceptable working conditions, and they are being used to undermine labor and justify layoffs. These are harms that we do not want to perpetuate, even if only indirectly.

Note that aside from generating code or other contributions, AI tools can sometimes answer questions you may have about Servo, but we’ve found that these answers are often incorrect or very misleading.

In general, do not assume that AI tools are a source of truth regarding how Servo works. Consider asking your questions on Zulip instead.

Conduct

Servo Code of Conduct is published at https://servo.org/coc/.

Governance

Servo’s governance is defined by the Technical Steering Committee (TSC) and documented in the servo/project repository.

Git Setup

If you are new to Git or distrbuted version control, it’s highly recommended that you spend some time learning the basics before continuing here. There are many incredible resources online for learning Git. For instance, the Zulip project maintains a very thorough guide to using Git for contributing to an open source project. The information there is often very relevant to working on Servo as well. Becoming proficient with Git is a skill that will come in handy in many kinds of software development tasks and it’s worth the time.

When you cloned Servo originally, the upstream Servo repository at https://github.com/servo/servo was your upstream.

You can choose to keep that configuration, but the recommneded workflow is the following:

- Fork the upstream Servo repository.

- Check out a clone of your newly-forked copy of Servo.

Note that thegit clone --depth 10 https://github.com/<username>/servo.git--depth 10arguments here throw away most of Servo’s commit history for better performance. They can be omitted. - Add a new remote named

upstreamthat points atservo/servo.git remote add upstream https://github.com/servo/servo.git

Starting a new change

When you want to work on a new change, you shouldn’t do it on the main branch as that’s where you want to keep your copy of the upstream repository.

Instead you should do your work on a branch.

- Update your main branch to the latest upstream changes.

git checkout main git pull origin main - Create a new branch based on the main branch.

git checkout -b issue-12345 - Make your changes and commit them on that branch.

Don’t forget to sign off on each commit, too!

git commit --signoff -m "script: Add a stub interface for MessagePort"

Next, you probably want to make a pull request with your changes.

Editor Setup

It is highly recommended that you set up your editor to support rust-analyzer.

Although it requires a decent amount of RAM and CPU, this will make the experience of working on Servo much better as your editor will be able to provide you with code completion, live compilation errors and warnings, and readily-accessible rustdoc.

Unfortunately, rust-analyzer tries to run cargo without mach, which will cause issues due

to the special configuration that Servo currently needs to build.

Use the following instructions to configure your IDE.

Additions are welcome here!

Visual Studio Code

It’s recommend that you add the following to your project specific settings in .vscode/settings.json:

{

"rust-analyzer.rustfmt.overrideCommand": [ "./mach", "fmt" ],

"rust-analyzer.check.overrideCommand": [

"./mach",

"clippy",

"--message-format=json",

"--target-dir",

"target/lsp",

"--features",

"tracing,tracing-perfetto"

],

"rust-analyzer.cargo.buildScripts.overrideCommand": [

"./mach",

"clippy",

"--message-format=json",

"--target-dir",

"target/lsp",

"--features",

"tracing,tracing-perfetto" ],

}

Notes:

- In the above excerpt, the language server is building into its own target directory,

target/lsp, in order to avoid unwanted rebuilds. If you would like to save disk space you can remove the--target-dirandtarget/lsparguments and the default target directory will be used. - To enable optional build settings, simply add them to the build argument list in your configuration file.

- Windows: If you are on Windows, you will need to use

./mach.batinstead of just./mach. If you do not, you may receive an error saying that the command executed is not a valid Win32 application. - Cross-compilation: If you are cross-compiling, you can override the target used for cargo by adding

"rust-analyzer.cargo.target": "aarch64-linux-android". Note that some LSP features might not work in this configuration.

Zed

If you are using Zed, you must do something very similar to what is described for Visual Studio Code, but the Zed configuration file expects a slightly different syntax.

In your ./zed/settings.json file you need something like this:

{

"lsp": {

"rust-analyzer": {

"initialization_options": {

"checkOnSave": true,

"check": {

"overrideCommand": [

"./mach",

"clippy",

"--message-format=json",

"--target-dir",

"target/lsp",

"--feature",

"tracing,tracing-perfetto"

]

},

"cargo": {

"allTargets": false,

"buildScripts": {

"overrideCommand": [

"./mach",

"clippy",

"--message-format=json",

"--target-dir",

"target/lsp",

"--feature",

"tracing,tracing-perfetto"

]

}

},

"rustfmt": {

"extraArgs": [

"--config",

"unstable_features=true",

"--config",

"binop_separator=Back",

"--config",

"imports_granularity=Module",

"--config",

"group_imports=StdExternalCrate"

]

}

}

}

}

}

NixOS

If you are on NixOS, you might get errors about pkg-config or crown:

thread ‘main’ panicked at ’called `Result::unwrap()` on an `Err` value: “Could not run `PKG_CONFIG_ALLOW_SYSTEM_CFLAGS=\“1\” PKG_CONFIG_ALLOW_SYSTEM_LIBS=\“1\” \“pkg-config\” \“–libs\” \“–cflags\” \“fontconfig\”`

[ERROR rust_analyzer::main_loop] FetchWorkspaceError: rust-analyzer failed to load workspace: Failed to load the project at /path/to/servo/Cargo.toml: Failed to read Cargo metadata from Cargo.toml file /path/to/servo/Cargo.toml, Some(Version { major: 1, minor: 74, patch: 1 }): Failed to run `cd “/path/to/servo” && “cargo” “metadata” “–format-version” “1” “–manifest-path” “/path/to/servo/Cargo.toml” “–filter-platform” “x86_64-unknown-linux-gnu”`: `cargo metadata` exited with an error: error: could not execute process `crown -vV` (never executed)

mach passes different RUSTFLAGS to the Rust compiler than plain cargo, so if you try to build Servo with cargo, it will undo all the work done by mach (and vice versa).

Because of this, and because Servo can currently only be built with mach, you need to configure the rust-analyzer extension to use mach in .vscode/settings.json:

Using crown

If you are using --use-crown, you should also set CARGO_BUILD_RUSTC in .vscode/settings.json as follows, where /nix/store/.../crown is the output of nix-shell --run 'command -v crown'.

{

"rust-analyzer.server.extraEnv": {

"CARGO_BUILD_RUSTC": "/nix/store/.../crown",

},

}

These settings should be enough to not need to run code . from within a nix-shell, but it wouldn’t hurt to try that if you still have problems.

Problems with proc macros

When enabling rust-analyzer’s proc macro support, you may start to see errors like

proc macro `MallocSizeOf` not expanded: Cannot create expander for /path/to/servo/target/debug/deps/libfoo-0781e5a02b945749.so: unsupported ABI `rustc 1.69.0-nightly (dc1d9d50f 2023-01-31)` rust-analyzer(unresolved-proc-macro)

This means rust-analyzer is using the wrong proc macro server, and you will need to configure the correct one manually. Use mach to query the current sysroot path, and copy the last line of output:

$ ./mach rustc --print sysroot

NOTE: Entering nix-shell /path/to/servo/shell.nix

info: component 'llvm-tools' for target 'x86_64-unknown-linux-gnu' is up to date

/home/me/.rustup/toolchains/nightly-2023-02-01-x86_64-unknown-linux-gnu

Then configure either your sysroot path or proc macro server path in .vscode/settings.json:

{

"rust-analyzer.procMacro.enable": true,

"rust-analyzer.cargo.sysroot": "[paste what you copied]",

"rust-analyzer.procMacro.server": "[paste what you copied]/libexec/rust-analyzer-proc-macro-srv",

}

Style Guide

The majority of our style recommendations are automatically enforced via our automated linters. This document has guidelines that are less easy to lint for.

Lots of code in Servo was written before these recommendations were agreed upon. We welcome pull requests to bring project code up to date with the modern guidelines.

Rust

In general, all Rust code in Servo is automatically formatted via rustfmt when you run ./mach fmt, which should align your code with the official Rust Style Guide.

In addition, Rust code should use Rust API naming conventions.

There are a few non-obvious points in the naming conventions guide such as:

- The

get_prefix is generally not used for getters in Rust code. An exception to this rule is when it is used for a variant of aget()method like instd::cell::Cell::get_mut(). - When camel-casing, acronyms and contractions of compound words count as one word.

For instance, a struct should be called

HtmlParserand notHTMLParser.

Indentation

In order to make the flow of complicated functions and methods easier to follow, we try to minimize indentation by using early returns.

This also matches the language used in specifications in many cases.

When the logic of a function reaches 2 or 3 levels of indentation or when a short conditional block is an exceptional case that drops to the bottom of a long function, you should consider using an early return.

Early returns work very well when combined with the necessity to unwrap Options or enum variants.

Prefer the following syntax when returning early when an Option value is None:

#![allow(unused)]

fn main() {

let Some(inner_value) = option_value else {

return

};

}This also works for enum variants as well:

#![allow(unused)]

fn main() {

enum Pet {

Dog(usize),

Cat(usize),

}

let pet = Pet::Dog(10);

let Pet::Dog(age) = pet else {

return;

};

}Abbreviation

Servo follows the Google C++ guide rules for naming. Avoid abbreviations that would not be known to someone outside of the project. Do not abbreviate by deleting letters in the middle of words.

Exception::

You may use some universally-known abbreviations, such as the use of i for a loop index.

You may also use single letters such as T for Rust type parameters.

Dead code

In almost all cases, do not commit dead code or commented out code to the repository. Commented out code can bit rot easily and dead code is not tested. When code becomes dead because it is completely unused, it should be removed.

Exception:

An exception for this case is when code is dead only one in some compilation configurations.

In that case, you use an expect(dead_code) compiler directive with a configuration qualifier.

For example:

#![allow(unused)]

fn main() {

#[cfg_attr(any(target_os = "android", target_env = "ohos"), expect(dead_code))]

pub(crate) const LINE_HEIGHT: f32 = 76.0;

}In this case, the LINE_HEIGHT constant is compiled, but expected to be dead when compiling for Android or OpenHarmony.

unsafe code

Try to avoid unsafe code.

Unfortunately, a web engine is complicated so some amount of unsafe code is inevitable in Servo.

In the case that you have to make an unsafe function, use Safety comments, which explain why a block is safe and what the safety invariants are.

Use good judgement about when to add safety comments to unsafe blocks inside of functions.

Assertions

When Servo’s internal logic should make a certain condition impossible, use an assert! or debug_assert! statement to ensure that is the case.

You should think of assert! as both a kind of a test and a kind of documentation.

If the invariant expressed in the assertion ever becomes false, Servo might begin to crash when running tests, preventing the introduction or logic errors.

In addition, people reading the code can know what the author presumed to be true at a given point in the code in a stronger way than a comment allows.

If a particular part of a code is unreachable, for instance if an enum variant was handled before and shouldn’t be encountered later in the same function, use unreachable!(), but always filling the text with why the code is unreachable.

unwrap() and expect()

unwrap on Option or Result should almost never be used.

Instead, handle the None or Err case, doing any necessary error handling.

Servo should not crash, when possible.

If None or an Err is impossible due to the logic of Servo-internal code that does not involve external input or crates, then you can use expect() like you would use an assertion.

The text passed as an argument to expect() should express why the value cannot be None or Err.

Exception::

When dealing with Rust’s std::sync::Mutexor other concurrency primitives which use poisoning, it is appropriate to use unwrap.

todo!() and unimplemented!()

In code that is reachable via execution, do not use todo! or unimplemented!.

These macros will cause Servo to panic and normal web content shouldn’t cause Servo to panic.

Intead, try to make these kind of cases unreachable and return proper error values or simply have the code do nothing instead.

Shell scripts

Shell scripts are suitable for small tasks or wrappers, but it’s preferable to use Python for anything with a hint of complexity or in general.

Shell scripts should be written using bash, starting with this shebang:

#!/usr/bin/env bash

Note that the version of bash available on macOS by default is quite old, so be careful when using new features.

Scripts should enable a few options at the top for robustness:

set -o errexit

set -o nounset

set -o pipefail

Remember to quote all variables, using the full form: "${SOME_VARIABLE}".

Use "$(some-command)" instead of backticks for command substitution.

Note that these should be quoted as well.

Servo Book

- Use permalinks when linking to source code repos — press

Yin GitHub to get a permanent URL

Markdown source

- Use one sentence per line with no column limit, to make diffs and history easier to understand

To help split sentences onto separate lines, you can replace ([.!?]) → $1\n, but watch out for cases like “e.g.”.

Then to fix indentation of simple lists, you can replace ^([*-] .*\n([* -].*\n)*)([^\n* -]) → $1 $3, but this won’t work for nested or more complex lists.

- For consistency, indent nested lists with two spaces, and use

-for unordered lists

Notation

- Use bold text when referring to UI elements like menu options, e.g. “click Inspect”

- Use

backtickswhen referring to single-letter keyboard keys, e.g. “pressAor Ctrl+A”

Error messages

- Where possible, always include a link to documentation, Zulip chat, or source code — this helps preserve the original context, and helps us check and update our advice over time

The remaining rules for error messages are designed to ensure that the text is as readable as possible, and that the reader can paste their error message into find-in-page with minimal false negatives, without the rules being too cumbersome to follow.

Wrap the error message in <pre><samp>, with <pre> at the start of the line (not indented).

If you want to style the error message as a quote, wrap it in <pre><blockquote><samp>.

<pre> treats newlines as line breaks, and at the start of the line, it prevents Markdown syntax from accidentally taking effect when there are blank lines in the error message.

<samp> marks the text as computer output, where we have CSS that makes it wrap like it would in a terminal.

Code blocks (<pre><code>) don’t wrap, so they can make long errors hard to read.

Replace every & with &, then replace every < with <.

Text inside <pre> will never be treated as Markdown, but it’s still HTML markup, so it needs to be escaped.

Always check the rendered output to ensure that all of the symbols were preserved.

You may find that you still need to escape some Markdown with \, to avoid rendering called `Result::unwrap()` on an `Err` value as called Result::unwrap() on an Err value.

| Error message | Markdown |

|---|---|

thread 'main' panicked at 'called `Result::unwrap()` on an `Err` value: "Could not run `PKG_CONFIG_ALLOW_SYSTEM_CFLAGS=\"1\" PKG_CONFIG_ALLOW_SYSTEM_LIBS=\"1\" \"pkg-config\" \"--libs\" \"--cflags\" \"fontconfig\"` |

|

error[E0765]: ...

--> src/main.rs:2:14

|

2 | println!("```);

| ______________^

3 | | }

| |__^

|

|

Making a Pull Request

Contributions to Servo or its dependencies should be made in the form of GitHub pull requests. Each pull request will be reviewed by a core contributor (someone with permission to land patches) and either landed in the main tree or given feedback for changes that would be required. All contributions should follow this format, even those from core contributors.

Pull request checklist

-

Branch from the main branch and, if necessary, rebase your branch to main before submitting your pull request. If it doesn’t merge cleanly with main you may be asked to rebase your changes.

-

Run

./mach fmtand./mach test-tidyon your change. -

Commits should be as small as possible, while ensuring that each commit is correct independently (i.e., each commit should compile and pass tests).

-

Commits should be accompanied by a Developer Certificate of Origin sign-off, which indicates that you (and your employer if applicable) agree to be bound by the terms of the project license. In git, this is the

-soption togit commit. -

If your patch is not getting reviewed, or you need a specific person to review it, you can @-reply a reviewer asking for a review in the pull request or a comment, or you can ask for a review in the Servo chat.

-

Add tests relevant to the fixed bug or new feature. For a DOM change this will usually be a web platform test; for layout, a reftest. See our testing guide for more information.

Opening a pull request

First, push your branch to your origin remote.

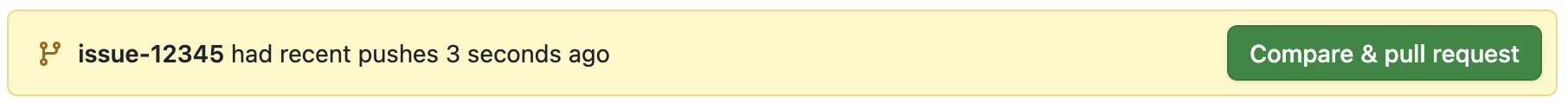

Next, either open the URL that is returned by a successful push to start the pull request,

or visit your Servo fork in your web browser and follow the UI prompts.

jdm@pathfinder servo % git push origin issue-12345

Enumerating objects: 43, done.

Counting objects: 100% (43/43), done.

Delta compression using up to 12 threads

Compressing objects: 100% (29/29), done.

Writing objects: 100% (29/29), 5.13 KiB | 5.13 MiB/s, done.

Total 29 (delta 25), reused 0 (delta 0), pack-reused 0

remote: Resolving deltas: 100% (25/25), completed with 14 local objects.

remote:

remote: Create a pull request for 'issue-12345' on GitHub by visiting:

remote: https://github.com/jdm/servo/pull/new/issue-12345

remote:

Title and description

In Servo, commits in pull requests are squashed and the pull request title and description are used to create the final commit message (example). It’s important that the information in both the title and description fully describes the change, as it makes the commit useful for those looking at the repository history and makes Servo a healthier project as a whole.

The title should succinctly describe what a change does. Some tips:

- Prefix the title with the lower-case name of the crate you are working on. For example, if the change modifies code in the “script” crate, prefix the title with “script: “. If a change affects multiple crates, identify which crate is the primary “source” of the change or just omit the prefix.

- Titles should be written as an imperative (a request or command) sentence with a verb. For instance “layout: Skip box tree construction when possible.” Try to avoid generic verbs such as “fix”, “correct”, or “improve” and instead describe what the fix does. Code can be fixed multiple times, but the message should more uniquely identify the change.

- Prefixes should be lower-case and the rest of the title should only capitalize the first word and proper nouns (such as the name of data structures or specification concepts such as WebDriver). When in doubt follow what is written in the specification. Please do not use “Title Casing for Commit Messages.”

The commit description should:

- Describe the original problem or situation (perhaps linking to any open bugs).

- Describe how the change fixes the problem, improves the code, or prepares for some followup change.

- Explain any caveats with the change, such as newly failing tests, performance degradation, or uncovered edge cases. Discuss how these can be addressed in the future.

- Be written in multiple sentence paragraphs of text with either consistent wrapping (80 characters or less) or no wrapping at all (as GitHub will do this automatically).

The default pull request template includes several prompts; please fill them out by replacing the original instructions.

The “Testing” prompt is particularly important, since it helps streamline the review process. It asks:

- Are there already automated tests that cover the code that is being changed?

- If not, does this pull request include new automated tests?

- If not, what prevents adding at least one new test?

If you don’t know the answer, please write that down! It can always be rewritten later based on the reviewer feedback.

Addressing review comments

Prefer appending new commits to your branch over amending existing commits. This makes it easier for Servo reviewers to only look at the changes, which helps pull requests get reviewed more quickly.

git commit -s -m "script: Fix deadlock with cross-origin iframes."

git push origin issue-12345

Addressing merge conflicts

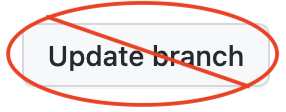

When a pull request has merge conflicts, the two most common ways to address them are merging and rebasing. Please do not press the “Update branch” button on the pull request; this performs a merge and will prevent some Servo CI functionality from working correctly.

Instead, first update your local main branch, then rebase your feature branch on top of the main branch, then force push the changes.

git checkout issue-12345

git pull --rebase upstream main

git push -f origin issue-12345

When a rebase encounters conflicts, you will need to address them:

jdm@pathfinder servo % git rebase main issue-12345

Auto-merging components/script/dom/bindings/root.rs

CONFLICT (content): Merge conflict in components/script/dom/bindings/root.rs

Auto-merging components/script/dom/node.rs

error: could not apply 932c8d3e97d... script: Remove unused field from nodes.

hint: Resolve all conflicts manually, mark them as resolved with

hint: "git add/rm <conflicted_files>", then run "git rebase --continue".

hint: You can instead skip this commit: run "git rebase --skip".

hint: To abort and get back to the state before "git rebase", run "git rebase --abort".

Please follow the GitHub documentation for resolving conflicts.

Running tests in pull requests

When you push to a pull request, GitHub automatically checks that your changes have no compilation, lint, or tidy errors.

To run unit tests or Web Platform Tests against a pull request, add one or more of the labels below to your pull request. If you do not have permission to add labels to your pull request, add a comment on your bug requesting that they be added.

| Label | Runs unit tests on | Runs web tests on |

|---|---|---|

T-full | All platforms | Linux |

T-linux-wpt | Linux | Linux |

T-macos | macOS | (none) |

T-windows | Windows | (none) |

Testing

This is boring. But your PR won’t get accepted without a test.

There are three types of tests in servo.

- Code formatting tests (

./mach test-tidy). - Unit tests (

./mach test-unit). - Integration tests (

./mach test-wpt).

The focus of this document will be on the Integration tests but we want to mention the other tests first.

Every submission has to confirm to the code formatting tests. Most of the formatting can be done

automatically with ./mach fmt.

The unit tests are in various files throughout the code base in typical rust #[cfg(test)] and

with #[test] annotations. You can run unit tests with ./mach test-unit. Example calls:

# Run all unit tests in the net crate

./mach test-unit -p net

# Run a specific unit test in the net crate

./mach test-unit -p net test_fetch_response_is_not_network_error

Running the whole test-suite can be very memory intensive, you can dampen this behaviour somewhat with

with restricting the amount of compiled crates with ./mach test-unit -j 4.

Integration Tests

Tests are located in the tests directory.

You’ll see that there are a lot of files in there, so finding the proper location for your test is not always obvious.

First, look at the “Testing” section in ./mach --help to understand the different test categories.

You’ll also find some update-* commands.

It’s used to update the list of expected results.

To run a test:

./mach test-wpt tests/wpt/yourtest

Add a new test

If you need to create a new test file, it should be located in tests/wpt/mozilla/tests or in tests/wpt/tests if it’s something that doesn’t depend on servo-only features.

You’ll then need to update the list of tests and the list of expected results:

./mach test-wpt --manifest-update

Debugging a test

See the debugging guide to get started in how to debug Servo.

Web Platform Tests (tests/wpt)

This folder contains the Web Platform Tests and the code required to integrate them with Servo. In addition, there are WebGPU and WebGL tests which are imported and converted into Web Platform Test style tests.

Contents of tests/wpt

In particular, this folder contains:

config.ini: some configuration for the Web Platform Testsinclude.ini: the subset of Web Platform Tests we currently runtests: In-tree copy of the Web Platform Testsmeta: expected failures for the Web Platform tests we runmozilla: Web Platform Test-style tests that cannot be upstreamedwebgl: The imported WebGL testswebgpu: The imported WebGPU tests (See WebGPU chapter for more information)

Running the Web Platform Tests

The simplest way to run the Web Platform Tests in Servo is ./mach test-wpt in the root directory.

This will run the subset of JavaScript tests defined in include.ini and log the output to stdout.

A subset of tests may be run by providing positional arguments to the mach command, either as filesystem paths or as test urls e.g.

./mach test-wpt tests/wpt/tests/dom/historical.html

to run the dom/historical.html test, or

./mach test-wpt dom

to run all the DOM tests.

There are also a large number of command line options accepted by the test harness; these are documented by running with --help.

Running the WPT tests with a debug build often results in timeouts.

Instead, consider building with mach build -r and testing with mach test-wpt -r.

Running Web Platform Tests on your GitHub fork

Alternatively, you can execute the tests on GitHub-hosted runners using mach try.

Usually, mach try linux-wpt (all tests, linux) will be enough.

You can view the run results in your fork under the “Actions” tab. Any failed tasks will include a list of stable unexpected results at the bottom of the log. Unexpected results that are known-intermittent can likely be ignored.

When opening a PR, you can include a link to the run. Otherwise, reviewers will run the tests again.

Running the Web Platform Tests with an external server

Normally wptrunner starts its own WPT server, but occasionally you might want to run multiple instances of mach test-wpt, such as when debugging one test while running the full suite in the background, or when running a single test many times in parallel (–processes only works across different tests).

This would lead to a “Failed to start HTTP server” errors, because you can only run one WPT server at a time. To fix this:

- Follow the steps in Running web tests manually

- Add a

breakto start_servers in serve.py as follows:

--- a/tests/wpt/tests/tools/serve/serve.py

+++ b/tests/wpt/tests/tools/serve/serve.py

@@ -746,6 +746,7 @@ def start_servers(logger, host, ports, paths, routes, bind_address, config,

mp_context, log_handlers, **kwargs):

servers = defaultdict(list)

for scheme, ports in ports.items():

+ break

assert len(ports) == {"http": 2, "https": 2}.get(scheme, 1)

# If trying to start HTTP/2.0 server, check compatibility

- Run

mach test-wptas many times as needed

If you get unexpected TIMEOUT in testharness tests, then the custom testharnessreport.js may have been installed incorrectly (see Running web tests manually for more details).

Running Web Platform Tests manually

(See also the relevant section of the upstream README.)

It can be useful to run a test without the interference of the test runner, for example when using a debugger such as gdb.

To do this, we need to start the WPT server manually, which requires some extra configuration.

To do this, first add the following to the system’s hosts file:

127.0.0.1 www.web-platform.test

127.0.0.1 www1.web-platform.test

127.0.0.1 www2.web-platform.test

127.0.0.1 web-platform.test

127.0.0.1 xn--n8j6ds53lwwkrqhv28a.web-platform.test

127.0.0.1 xn--lve-6lad.web-platform.test

Navigate to tests/wpt/web-platform-tests for the remainder of this section.

Normally wptrunner installs Servo’s version of testharnessreport.js, but when starting the WPT server manually, we get the default version, which won’t report test results correctly. To fix this:

- Create a directory

local-resources - Copy

tools/wptrunner/wptrunner/testharnessreport-servo.jstolocal-resources/testharnessreport.js - Edit

local-resources/testharnessreport.jsto substitute the variables as follows:

%(output)d- →

1if you want to play with the test interactively (≈ pause-after-test) - →

0if you don’t care about that (though it’s also ok to use1always)

- →

%(debug)s→true

- Create a

./config.jsonas follows (seetools/wave/config.default.jsonfor defaults):

{"aliases": [{

"url-path": "/resources/testharnessreport.js",

"local-dir": "local-resources"

}]}

Then start the server with ./wpt serve.

To check if testharnessreport.js was installed correctly:

- The standard output of

curl http://web-platform.test:8000/resources/testharnessreport.jsshould look like testharnessreport-servo.js, not like the default testharnessreport.js - The standard output of

target/release/servo http://web-platform.test:8000/css/css-pseudo/highlight-pseudos-computed.html(or any testharness test) should contain lines starting with:TEST STARTTEST STEPTEST DONEALERT: RESULT:

To prevent browser SSL warnings when running HTTPS tests locally, you will need to run Servo with --certificate-path resources/cert-wpt-only.

Running the Web Platform Tests in Firefox

When working with tests, you may want to compare Servo’s result with Firefox.

You can supply --product firefox along with the path to a Firefox binary (as well as few more odds and ends) to run tests in Firefox from your Servo checkout:

GECKO="$HOME/projects/mozilla/gecko"

GECKO_BINS="$GECKO/obj-firefox-release-artifact/dist/Nightly.app/Contents/MacOS"

./mach test-wpt dom --product firefox --binary $GECKO_BINS/firefox --certutil-binary $GECKO_BINS/certutil --prefs-root $GECKO/testing/profiles

Updating Web Platform Test expectations

When fixing a bug that causes the result of a test to change, the expected results for that test need to be changed.

This can be done manually, by editing the .ini file under the meta folder that corresponds to the test.

In this case, remove the references to tests whose expectation is now PASS, and remove .ini files that no longer contain any expectations.

When a larger number of changes is required, this process can be automated.

This first requires saving the raw, unformatted log from a test run, for example by running ./mach test-wpt --log-raw /tmp/servo.log.

Once the log is saved, run from the root directory:

./mach update-wpt /tmp/servo.log

Running all Web Platform Tests locally will take a long time and often cause unrelated failures (such as the runner exceeding the maximum number of open files on your system). The test expectations are also set based on the results of Servo’s CI machines, so differences in your setup might cause failures.

Usually you will have a rough idea where tests for your changes are.

For example, almost all tests for SubtleCrypto code are in the WebCryptoAPI directory.

In this case you can run only these tests with ./mach test-wpt WebCryptoAPI, followed by ./mach update-wpt as described above.

To ensure that other tests didn’t break, do a try run afterwards.

For updating WebGPU expectations, please see WebGPU chapter for more information.

Modifying Web Platform Tests

Please see the Web Platform Test Writing Tests guide for how to write tests. In general, a good way to start is to follow these steps:

- Find the directory that tests the feature you want to test.

- Find a test in that directory that tests a similar behavior, make a copy of the test with an appropriate name, and make your modifications.

- If the test is a reference test, you should also copy the reference if it needs to change, following the naming pattern of the directory you are modifying.

- Run

./mach update-manifestto update the WPT manifest with the new tests.

You must also run ./mach update-manifest whenever you modify a test, reference, or support file.

All changes to our in-tree Web Platform Tests will be upstreamed automatically when your PR is merged.

Servo-specific tests

The mozilla directory contains tests that cannot be upstreamed for some reason (e.g. because they depend on Servo-specific APIs), as well as some legacy tests that should be upstreamed at some point.

When run they are mounted on the server under /_mozilla/.

Analyzing reftest results

Reftest results can be analyzed from a raw log file.

To generate this run with the --log-raw option e.g.

./mach test-wpt --log-raw wpt.log

This file can then be fed into the reftest analyzer which will show all failing tests (not just those with unexpected results). Note that this ingests logs in a different format to original version of the tool written for gecko reftests.

The reftest analyzer allows pixel-level comparison of the test and reference screenshots.

Tests that both fail and have an unexpected result are marked with a !.

Updating the WPT manifest

MANIFEST.json can be regenerated automatically with the mach command update-manifest e.g.

./mach update-manifest

This is equivalent to running

./mach test-wpt --manifest-update SKIP_TESTS

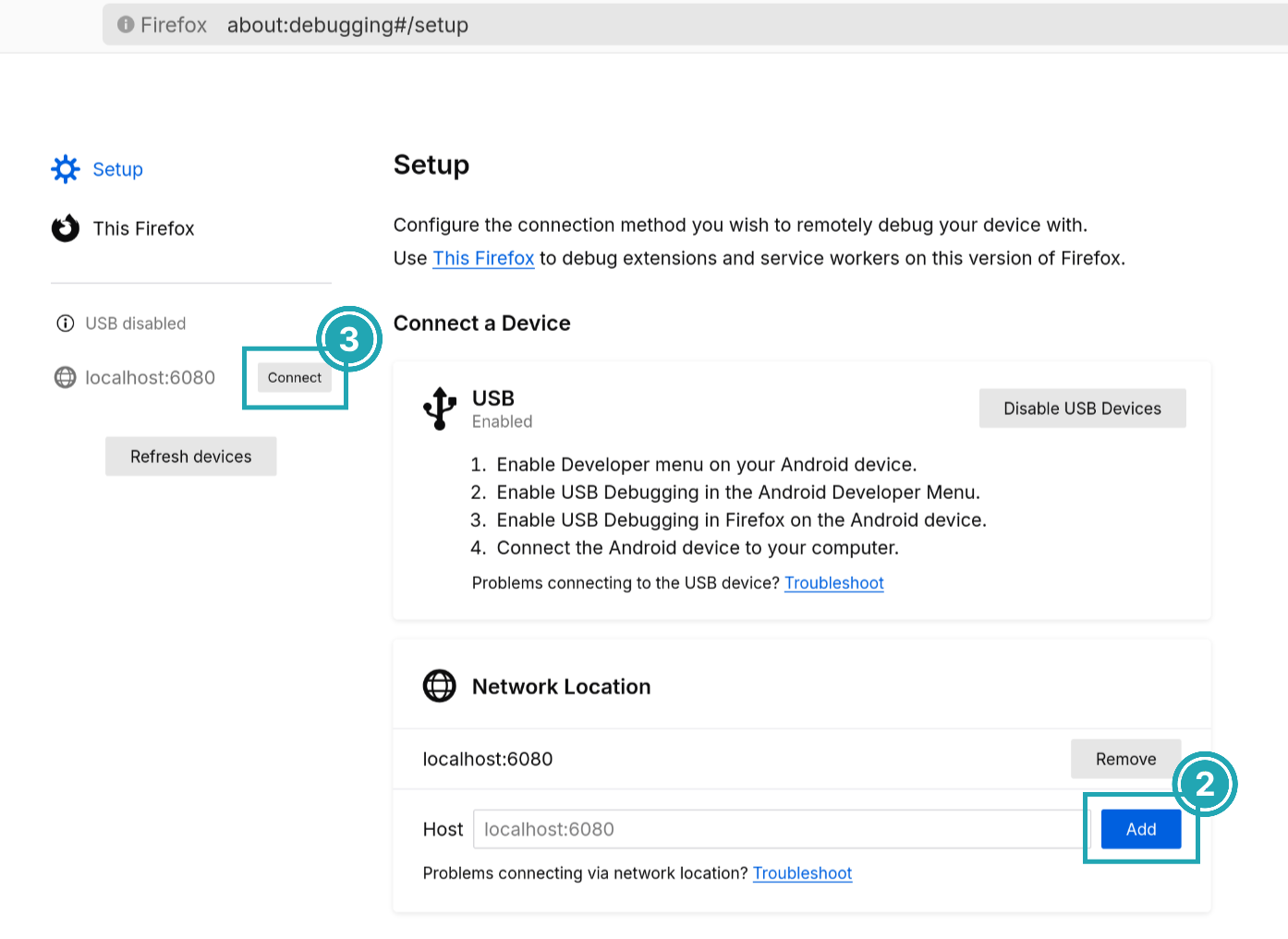

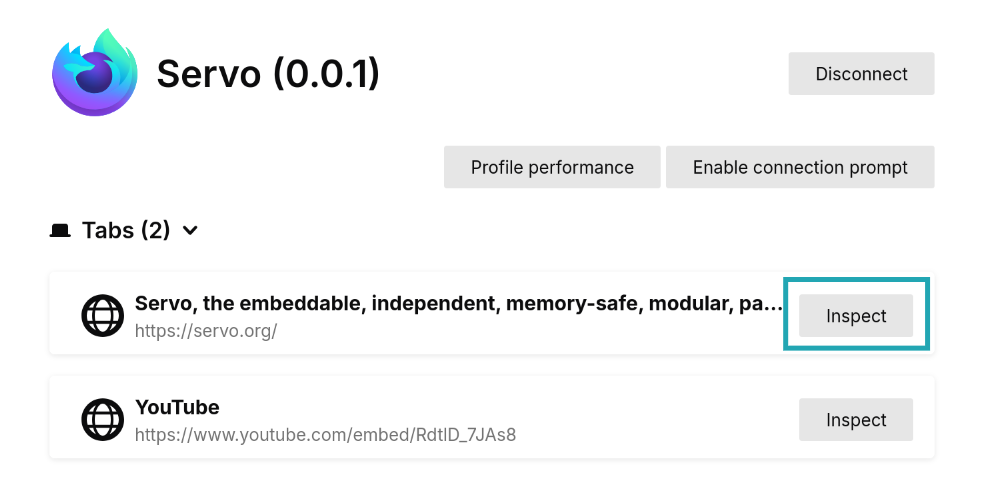

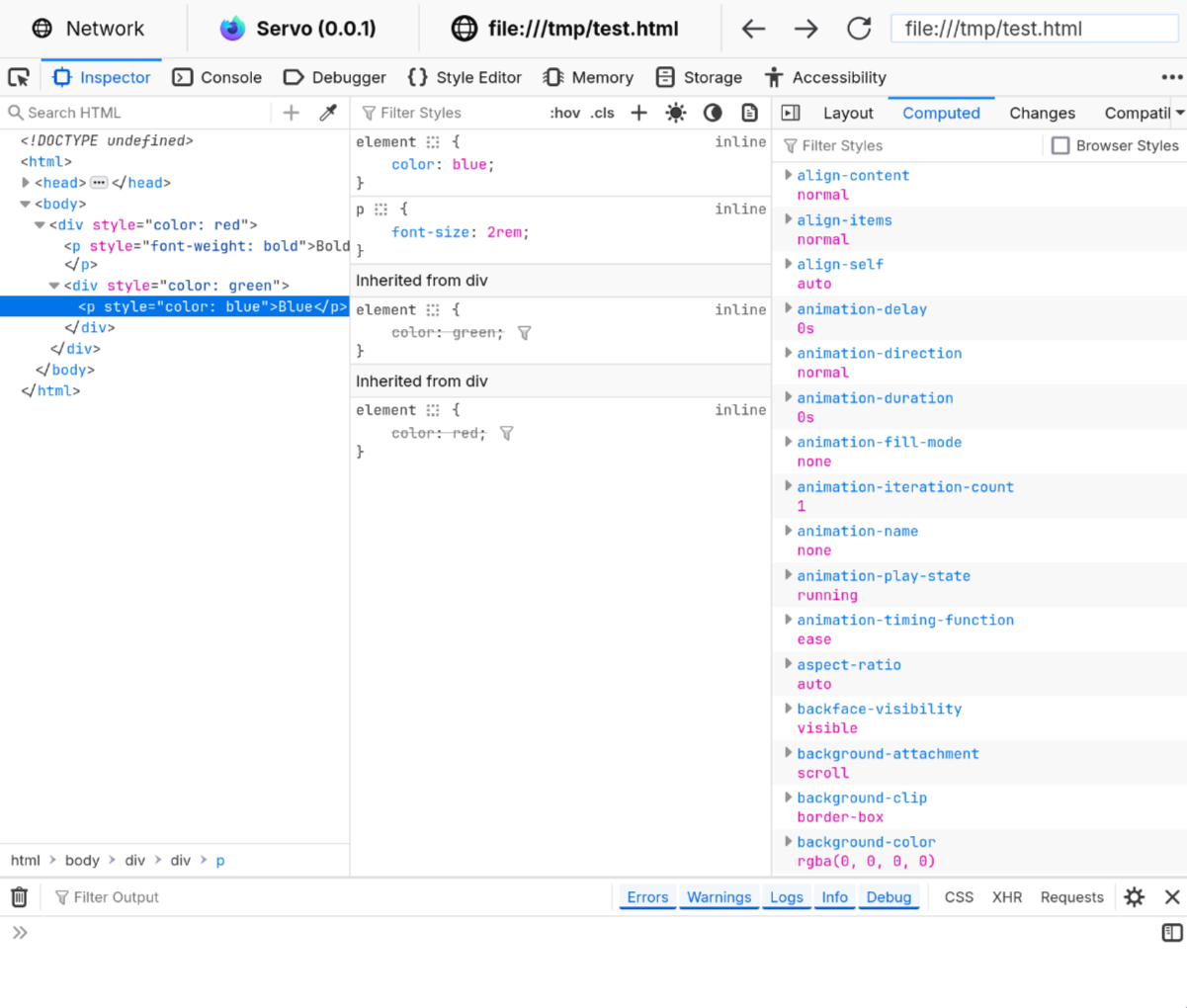

Running Devtools Tests

The simplest way to run the Devtools tests in Servo is ./mach test-devtools from the root directory.

This will run all tests defined in devtools_tests.py. All Devtools-related test files exist in python/servo/devtools_tests.

Debugging

One of the simplest ways to debug Servo is to print interesting variables with the println!, eprintln!, or dbg! macros.

In general, these should only be used temporarily; you’ll need to remove them or convert them to proper debug logging before your pull request will be merged.

Debug logging with log and RUST_LOG

Servo uses the log crate for long-term debug logging and error messages:

#![allow(unused)]

fn main() {

log::error!("hello");

log::warn!("hello");

log::info!("hello");

log::debug!("hello");

log::trace!("hello");

}Unlike macros like println!, log adds a timestamp and tells you where the message came from:

[2024-05-01T09:07:42Z ERROR servoshell::app] hello

[2024-05-01T09:07:42Z WARN servoshell::app] hello

[2024-05-01T09:07:42Z INFO servoshell::app] hello

[2024-05-01T09:07:42Z DEBUG servoshell::app] hello